Collaborative Control for Geometry-Conditioned PBR Image Generation

Shimon Vainer, Mark Boss, Mathias Parger, Konstantin Kutsy, Dante De Nigris, Ciara Rowles, Nicolas Perony, Simon Donné

February 2024, Arxiv

January 2023, with the PhD in my pocket, I joined Unity to work on real-time NeRFs. It sounded like the perfect job - I am a big video game enthusiast and already developed several games with Unity, the task is a perfect fit for me and the team I am joining is awesome. Unfortunately, 2023 was not a good year for Unity, with multiple rounds of lay-offs and cost savings that also included our project. But that also created new opportunities for us. Unity started pushing AI features and we got the chance to get familiar with diffusion models and the current state-of-the-art in asset generation. The pace at which new and better approaches are popping up is unbelievable - and it is hard to keep up with the latest news in that area.

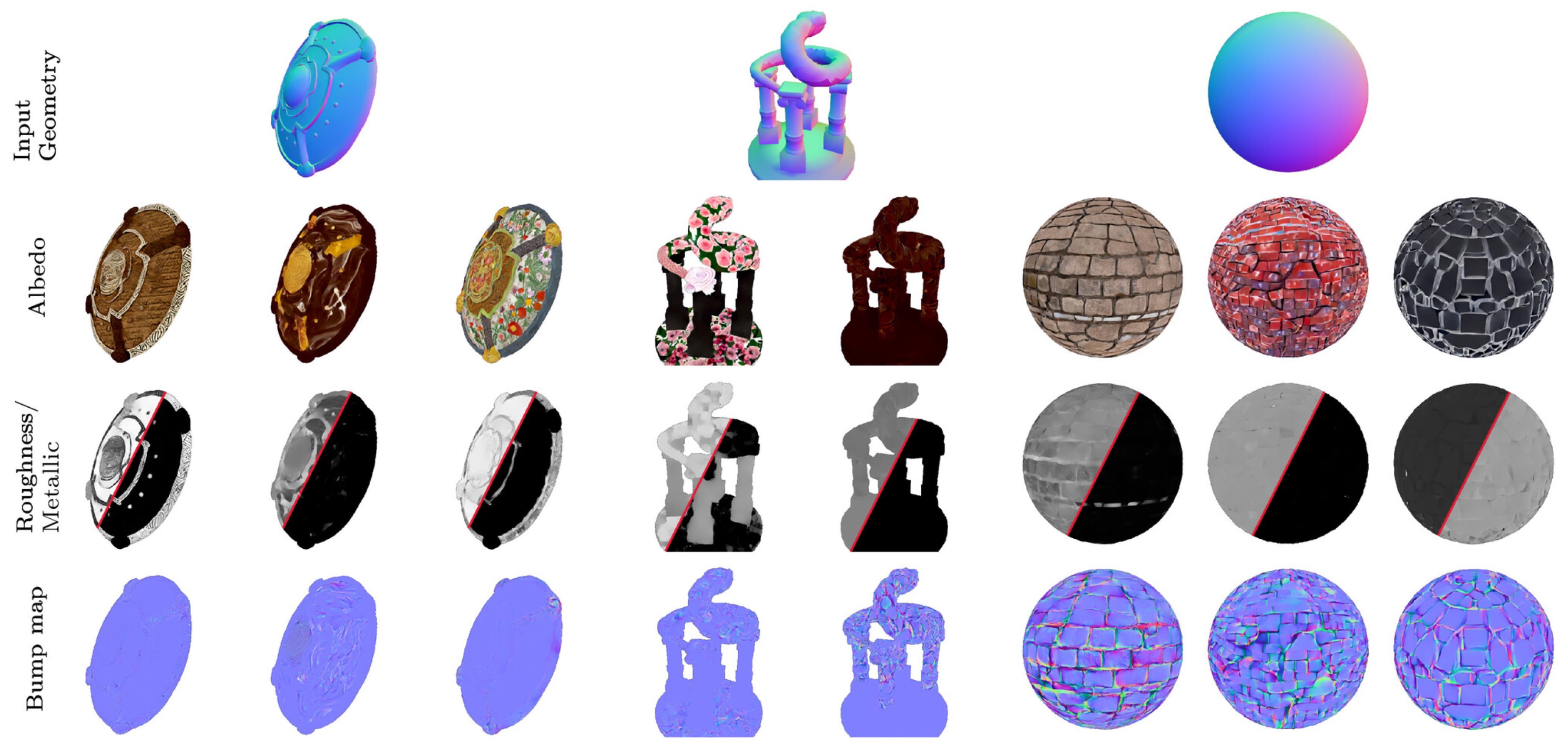

One topic that we saw great potential in is creating textures from text for existing 3D objects. Early approaches create inconsistent appearance around the object, but that is now fixed in newer approaches. However, nearly all text-to-texture approaches create only RGB output. That can be enough for mobile / cartoon-style games, but more realistic graphics require PBR materials for correct shading. Some approaches try to estimate PBR properties as a post-process, but that is an ill-posed problem and does not produce great results. Predicting PBR texture channels together with RGB sounds much more promising.

But training a diffusion model from scratch is costly and requires lots of training data - which we simply don’t have. Training with less training data reduces the model’s ability to create convincing outputs from text, especially for out-of-distribution samples. So, what we do instead in this paper, is to use a frozen stable diffusion (SD) model and pair it with a trainable PBR model. Our cross-network communication combines the state of both models after every self-attention layer, applies a linear layer to the state and then distributes the output back to the two models. This way, the SD model does not suffer from catastrophic forgetting, but is still able to communicate with the PBR model to settle for a common texture appearance.

Congrats to Shimon for his first first-author paper. And congrats to the rest of the team for the great team effort! I hope our paths cross again soon! :)