DeltaCNN: End-to-end CNN inference of sparse frame differences in videos

Mathias Parger, Chengcheng Tang, Christopher D Twigg, Cem Keskin, Robert Wang, Markus Steinberger

June 2022, CVPR

DeltaCNN was my favorite project during my PhD. It’s the second research collaboration with the great team at Meta Reality Labs, and the topic is a perfect match for my skills and interests.

The idea is very intuitive: applying CNNs on video input is very expensive and requires powerful hardware. But typically, large parts of the image change only little between two frames (e.g., background), and processing them again every frame is redundant.

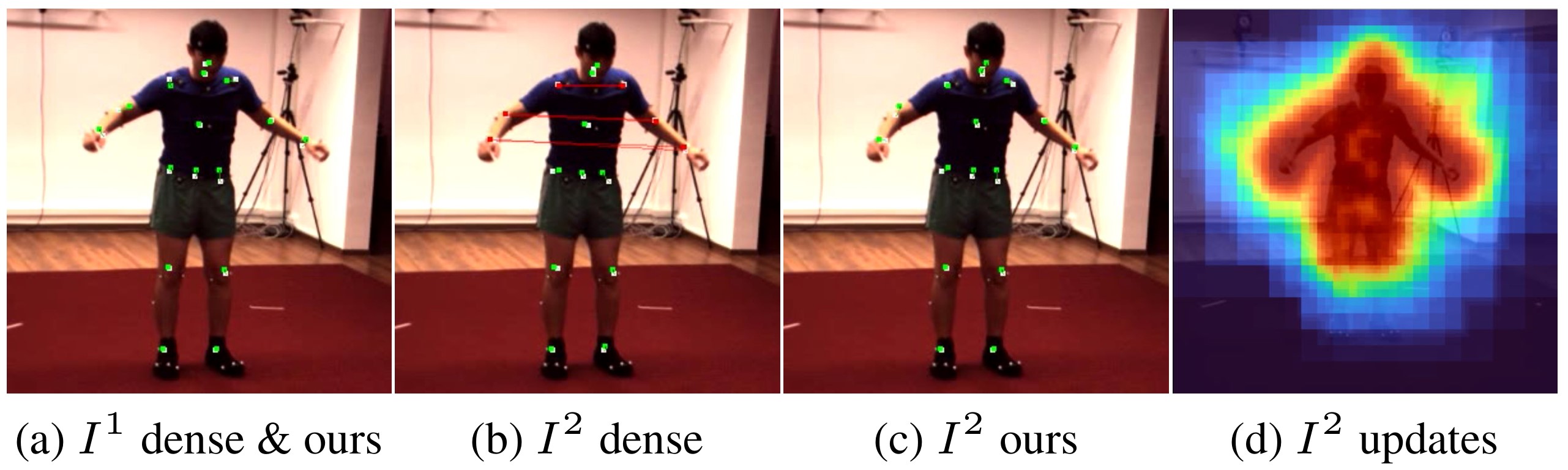

In DeltaCNN, we reuse previous results for pixels which have changed only little since the last time they were processed. This way, we can achieve great FLOP reductions for static camera scenarios like webcams or surveillance cameras with only a marginal hit on accuracy. Previous work has already demonstrated the theoretical gains in terms of FLOP reductions, but typically neglects that a reduction in multiplications does not always mean that it runs faster on actual hardware. cuDNN is fast…really fast. Implementing a convolution kernel that supports sparse inputs and tries to minimize FLOPs is very difficult - if not impossible. Furthermore, some previous research only focuses on convolutions and considers multiplications the only expensive operation. But in practice, memory bandwidth is at least as much of a bottleneck - and most layers of the CNN are only limited by memory bandwidth (activation, normalization, add, concatenate, clone, etc.). Processing them sparsely can lead to great performance gains.

That’s where DeltaCNN proposes a new, practical approach. Sparse Delta (the difference between the last processed pixel values and the current pixel values) are propagated end-to-end, from the first to the last layer of the network. Compared to previous work, this saves a lot of conversions from dense “full” values to sparse “delta” values. And it allows us to perform all the memory bandwidth limited layers efficiently - which is very important, since these layers become the main bottleneck once the convolution is processed sparsely.

Making this possible required a lot of engineering effort. Thousand of lines of CUDA code and months of trial-and-error for performance tuning. But the final result was worth it: DeltaCNN is as fast as cuDNN in dense mode (and often even faster, at least in PyTorch), but it achieves speedups of up to 7x for sparse updates.

But there is one major limitation: DeltaCNN only works well for static cameras. Even with slow camera motion, most of the pixels require updates - essentially resulting in mostly dense updates. In our follow-up project MotionDeltaCNN, we tackle this issue.