UNOC: Understanding occlusion for embodied presence in virtual reality

Mathias Parger, Chengcheng Tang, Yuanlu Xu, Christopher D Twigg, Lingling Tao, Yijing Li, Robert Wang, Markus Steinberger

December 2022, IEEE TVCG

UNOC was the first of three papers I created in collaboration with Meta Reality Labs. While working on this paper, I did a four-month internship at Meta Reality Labs in Sausalito. This was one of the best experiences of my life: working with great people in a great office, and living in a beautiful apartment at the beach in Tiburon.

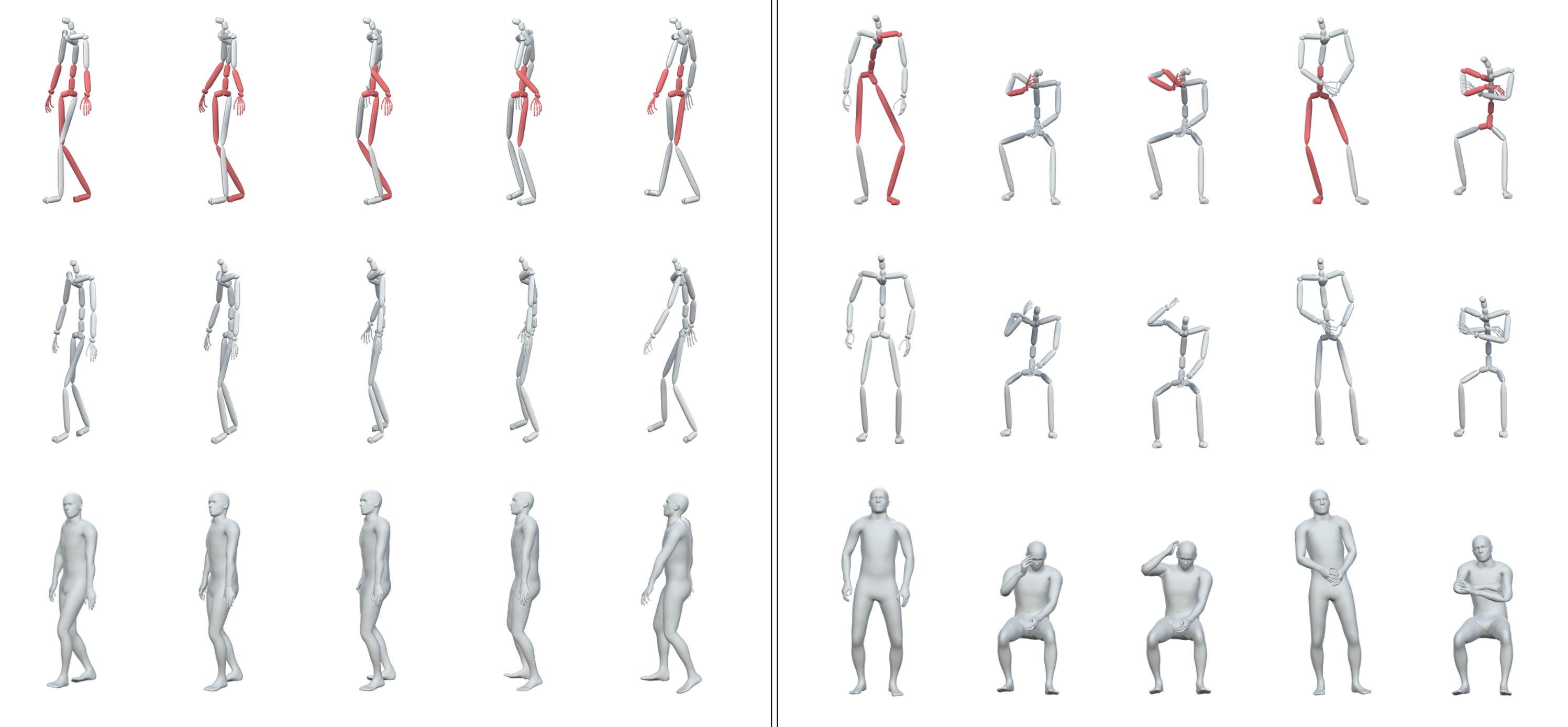

The goal of the project was to improve the stability of full-body pose estimation in VR headsets. Tracking the full body using only the cameras mounted on the headset is difficult, as large parts of the body are typically out of view or occluded by other body parts. Instead of building a tracker that tries to solve all problems at once, we took a different route: a differentiable post-processing step that fixes tracking errors in the estimated poses. We decided that a neural network might be the best way to tackle this problem. However, training data is limited and often does not include finger motion, so we created our own dataset using a high-quality Optitrack MoCap setup combined with NANSENSE gloves for robust finger tracking.

The final motion was cleaned up by a professional studio before the finger motion was fused with the body motion and calibrated in a post-processing step. This way, we were able to create a high-quality dataset of motions that are very common in real life but nearly absent from public datasets. Some examples include crossing arms or hands, resting the head on a fist, or scratching the forehead.

With our dataset, we trained a gated recurrent unit (GRU) to fill in poses of untracked joints using the set of currently tracked joints and the poses of previous frames as input. This way, we were able to greatly increase the final pose quality for our egocentric full body tracking simulation. Our dataset also proved to be useful to synthesize finger motion for datasets that don’t include fingers.
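To make the idea of filling in untracked joints more concrete, here is a minimal sketch in plain Python. It is not the network from the paper: the class name `TinyGRUFiller`, the hidden size, the one-scalar-per-joint pose representation, the missing bias terms, and the random, untrained weights are all assumptions made for illustration. It only shows the data flow described above: each frame's tracked joints and a tracking mask go into a GRU cell, the hidden state carries information from previous frames, and the prediction is used only for joints that are currently untracked.

```python
import math
import random


def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class TinyGRUFiller:
    """Hypothetical pose filler: one GRU cell plus a linear readout (untrained)."""

    def __init__(self, num_joints, hidden_size=8, seed=0):
        rng = random.Random(seed)
        in_size = 2 * num_joints  # masked joint values + tracking mask
        cat = in_size + hidden_size
        self.hidden_size = hidden_size
        self.Wz = rand_matrix(hidden_size, cat, rng)  # update gate
        self.Wr = rand_matrix(hidden_size, cat, rng)  # reset gate
        self.Wh = rand_matrix(hidden_size, cat, rng)  # candidate state
        self.Wo = rand_matrix(num_joints, hidden_size, rng)  # readout

    def step(self, pose, tracked, h):
        # Zero out untracked joints so the cell never sees unreliable values.
        x = [p if t else 0.0 for p, t in zip(pose, tracked)]
        x += [1.0 if t else 0.0 for t in tracked]
        z = sigmoid(matvec(self.Wz, x + h))
        r = sigmoid(matvec(self.Wr, x + h))
        rh = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(v) for v in matvec(self.Wh, x + rh)]
        h_new = [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]
        pred = matvec(self.Wo, h_new)
        # Keep tracked joints as observed; fill in only the untracked ones.
        filled = [p if t else q for p, t, q in zip(pose, tracked, pred)]
        return filled, h_new

    def run(self, poses, masks):
        h = [0.0] * self.hidden_size  # hidden state carries previous frames
        out = []
        for pose, tracked in zip(poses, masks):
            filled, h = self.step(pose, tracked, h)
            out.append(filled)
        return out


# Two frames, three joints; the middle joint is occluded in the second frame.
poses = [[0.1, 0.2, 0.3], [0.2, 0.0, 0.4]]
masks = [[True, True, True], [True, False, True]]
filled = TinyGRUFiller(num_joints=3).run(poses, masks)
```

In a real system the weights would be trained on motion data and each joint would carry a rotation or position rather than a single scalar, but the masking and pass-through logic would look similar.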