spECK: accelerating GPU sparse matrix-matrix multiplication through lightweight analysis

Mathias Parger, Martin Winter, Daniel Mlakar, Markus Steinberger

February 2020, PPOPP

This was my first project as PhD student and also the beginning of my passion for CUDA and performance optimization in general.

The task was simple: multiply two sparse matrices - given in CSR format - as fast as possible. Several solutions for this already exist, and as you might have expected, every approach is the best in its own evaluations. In contrast, our team wanted to implement a solution that is fast in all cases. So, we focused on decreasing the time required to process the entire SuiteSparse Matrix Collection. We made huge Excel tables with all kinds of statistics of the matrices (many thanks to Martin Winter) to figure out which approach works best for which kinds of matrices - and how we could use that knowledge to select the best suited approach on-the-fly.

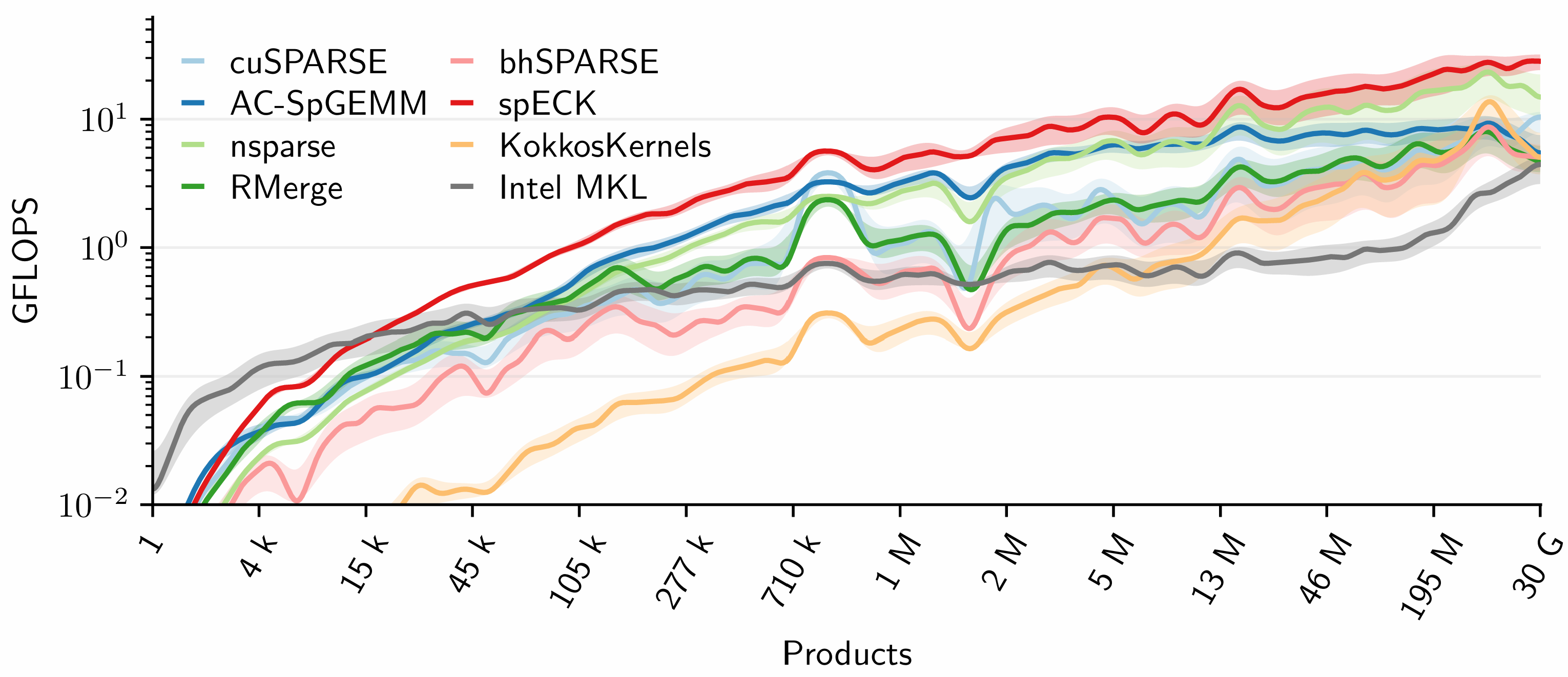

In the final version, we implemented a very simple analysis pass over the input matrices that gives me just enough information to decide which approach is best suited for the current input. But instead of doing this globally, we do this for every row (or group of rows) of the output matrix. And that’s already our main contribution: we select the best approach (out of three) for every row of the output, and estimate how to best distribute the work across the threads of a block. Combined with extensive amount of CUDA performance optimization, we were able to beat all other methods 80% of the time, and always performed closed to the best in the remaining cases.